More than a texture patch? (Part 2)

In the second part of this series, we will look at the problems that arise when displaying textures in three-dimensional space, and their solutions.

To display a figure covered with a texture, the relevant pixels of the texture must be determined for each dot of the monitor, and an average value must be calculated from them. Let's take the earth as an example. From a distance, it appears blue like the oceans and takes up little area on the screen; it consists of only a few dots. Consequently, many pixels of the texture contribute to the representation of a single dot. If we now reduce the distance to a more usual value, we can see the continents and their characteristics. The earth now occupies the whole area of our imaginary, square screen, and fewer pixels contribute to each dot than in the previous step. Next, we move a little closer and try to recognize the city in which we live. Individual pixels of the texture slowly become recognizable, so only about one pixel is left per dot. Finally, we move in very close and look for our house, but we can't see it because of the limited resolution of the texture. We have reached the minimum viewing distance: each pixel is already spread across several dots of the screen. The visual impression caused by this phenomenon is commonly referred to as "pixelated".
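The relationship between screen coverage and pixels per dot can be put into numbers. The following is only a rough sketch under simplifying assumptions: a square texture is mapped flat onto a square region of the screen, and the function name `texels_per_dot` as well as the example sizes are made up for illustration.

```python
def texels_per_dot(texture_size, covered_dots):
    """Average number of texture pixels that fall into one screen dot.

    Assumes a square texture (texture_size x texture_size pixels) mapped
    flat onto a square screen region (covered_dots x covered_dots dots).
    """
    ratio = texture_size / covered_dots
    return ratio * ratio

# A hypothetical 1024x1024 earth texture at four viewing distances,
# from far away (few dots covered) to very close (many dots covered).
for covered in (4, 256, 1024, 4096):
    print(covered, texels_per_dot(1024, covered))
```

A value above 1 means that several texture pixels have to be averaged for one dot, while a value below 1 means that one texture pixel is stretched over several dots, which is the "pixelated" case.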

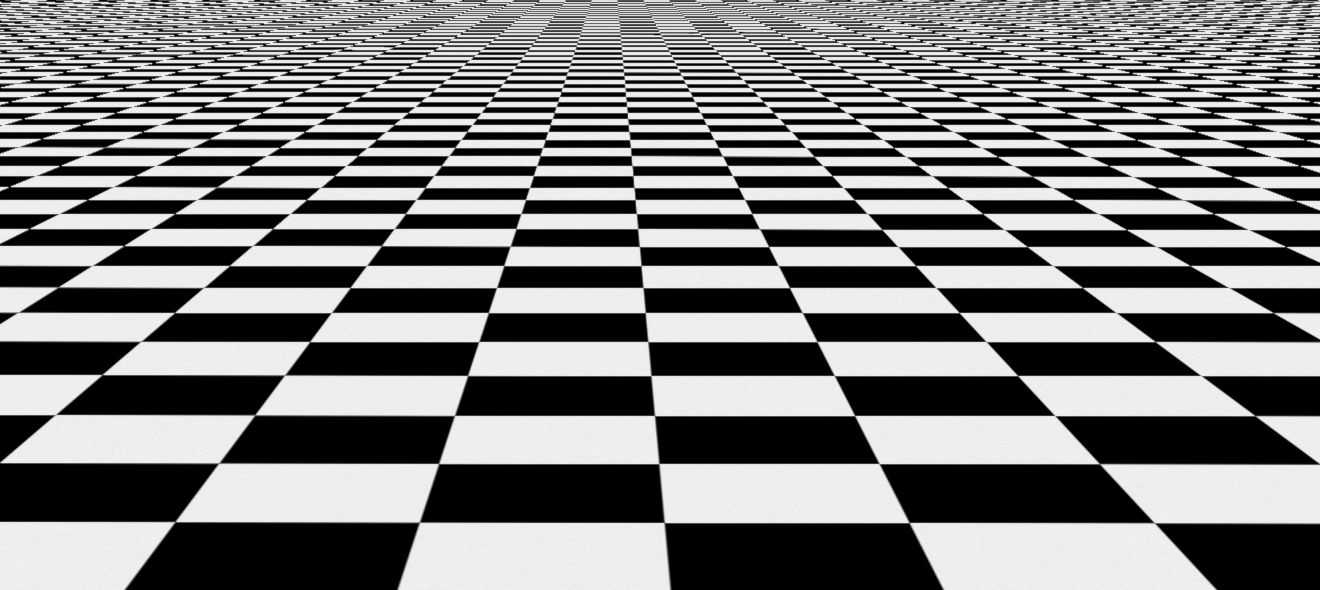

So the viewing distance determines how many pixels are relevant to a dot. Accordingly, large distances lead to a high computational effort. However, this is not the only problem. As the number of pixels and the resulting color variance grow, it becomes increasingly difficult to calculate a representative average value for them. Therefore, especially with planar faces, aliasing occurs, which manifests itself in the upper area of the following image as a so-called Moiré pattern. It can be clearly seen that the black and white fields no longer alternate regularly in the distance, although they should.
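The effect can be reproduced in one dimension. This is a simplified sketch, not what a graphics card actually does: the texture is a row of alternating black and white pixels, `step` is an assumed number of texture pixels per screen dot, and all function names are my own.

```python
import math

def stripe(texel):
    """Texture of alternating black (0) and white (1) pixels."""
    return texel % 2

def point_sample(step, count):
    """Pick exactly one texture pixel per dot, 'step' pixels apart."""
    return [stripe(math.floor(i * step)) for i in range(count)]

def box_sample(step, count):
    """Average all texture pixels that fall into each dot's footprint."""
    dots = []
    for i in range(count):
        start = math.floor(i * step)
        end = max(start + 1, math.floor((i + 1) * step))
        texels = [stripe(t) for t in range(start, end)]
        dots.append(sum(texels) / len(texels))
    return dots

print(point_sample(1.0, 8))  # close up: black and white alternate
print(point_sample(2.5, 8))  # far away: a false, coarser pattern appears
print(box_sample(2.0, 4))    # averaging the footprint gives a uniform gray
```

Picking a single pixel per dot produces stripes that are twice as wide as the real ones, which is the one-dimensional counterpart of the Moiré pattern. Averaging the whole footprint avoids this, but it costs more work the larger the footprint becomes.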

If the colors of the relevant pixels are close to each other, then the average is also close to the individual pixels and thus representative. However, the situation is different if the colors are far apart, such as black and white in the image above. Then the calculated average values also vary more strongly, which leads to "color jumps". To overcome this effect, a technique called mipmapping is used in computer graphics. Copies of a texture are created, each copy having only half the width and height of the previous one. So if the original texture is 1024×1024 pixels, the first mipmap is 512×512 pixels, the next one 256×256 pixels, and so on until the last one, which has a size of 1×1 pixel. In this one-time operation, we calculate the average values in advance. In doing so, we can also use higher-quality and more expensive algorithms than would be feasible at runtime in the game.
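Building such a chain of copies can be sketched in a few lines. The example below assumes a square grayscale texture whose size is a power of two, stored as nested lists, and it uses the simplest possible filter (averaging each 2×2 block). Real texture tools typically offer better filters; the function names are hypothetical.

```python
def downsample(image):
    """Halve width and height by averaging each 2x2 block of pixels."""
    half = len(image) // 2
    return [
        [
            (image[2 * y][2 * x] + image[2 * y][2 * x + 1]
             + image[2 * y + 1][2 * x] + image[2 * y + 1][2 * x + 1]) / 4
            for x in range(half)
        ]
        for y in range(half)
    ]

def build_mip_chain(image):
    """Return the original texture followed by all smaller copies down to 1x1."""
    chain = [image]
    while len(chain[-1]) > 1:
        chain.append(downsample(chain[-1]))
    return chain

# An 8x8 black and white checkerboard as a small stand-in for a real texture.
checker = [[((x + y) % 2) * 255 for x in range(8)] for y in range(8)]
chain = build_mip_chain(checker)

print([len(level) for level in chain])  # edge length of every level
print(chain[-1])                        # the final 1x1 copy
```

For the checkerboard, every smaller copy is already a uniform gray, because each 2×2 block contains two black and two white pixels. This is exactly the precomputed average that no longer has to be calculated while the game is running.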

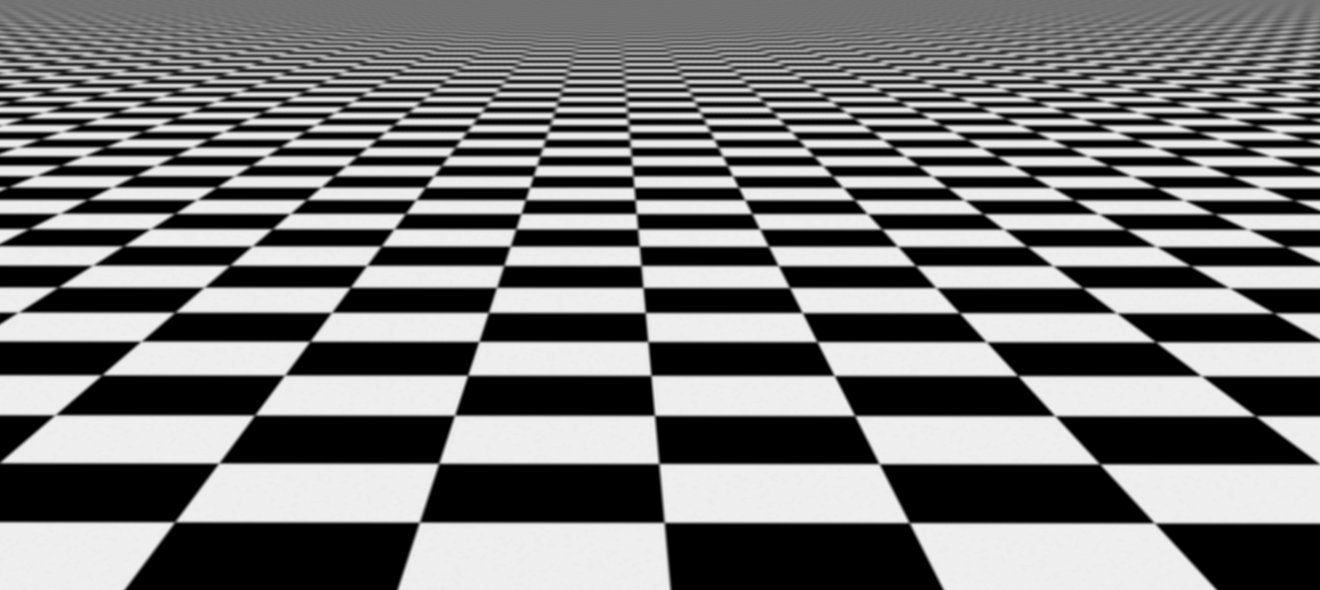

Now the original texture is no longer used exclusively for rendering. Instead, depending on the distance, one of its smaller copies is used, whichever has a more appropriate number of relevant pixels. This significantly reduces the computational effort per dot, and the aliasing no longer occurs. As a side effect, there is blurring, which becomes stronger the farther away the point in question is.
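How a suitable copy might be chosen can be sketched as follows. This is a common textbook approach and not taken from Gothic or Direct3D: since every level halves the edge length, the level is the base-2 logarithm of the number of texture pixels per dot along one axis. The function name and the rounding to the nearest level are my assumptions.

```python
import math

def select_mip_level(texels_per_dot_axis, level_count):
    """Choose the mipmap level whose pixels roughly match the screen dots.

    texels_per_dot_axis: texture pixels per screen dot along one axis
                         (assumed to be known from distance and projection).
    level_count:         number of levels in the chain, level 0 = original.
    """
    if texels_per_dot_axis <= 1.0:
        return 0  # close up: only the original texture is sharp enough
    level = math.floor(math.log2(texels_per_dot_axis) + 0.5)  # nearest level
    return min(level, level_count - 1)

# A 1024x1024 texture has 11 levels (1024, 512, ..., 1).
for ratio in (0.25, 1.0, 2.0, 4.0, 4096.0):
    print(ratio, select_mip_level(ratio, 11))
```

The farther away a face is, the more texture pixels fall into one dot and the higher the selected level, which also explains why the blurring increases with distance.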

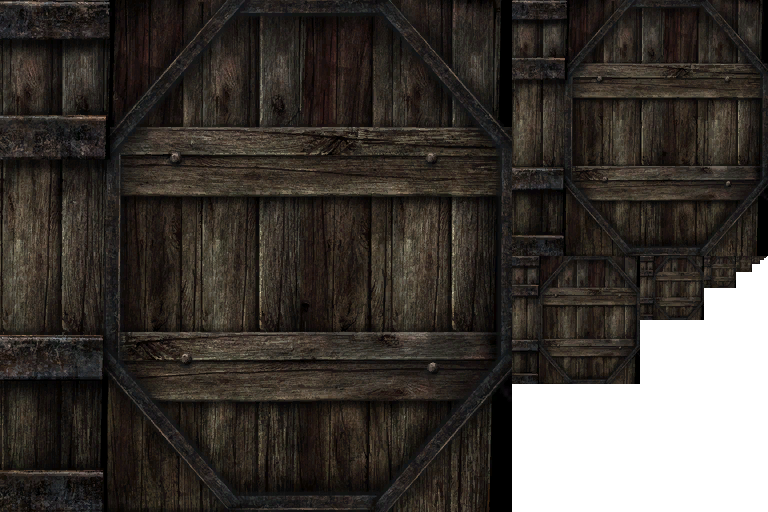

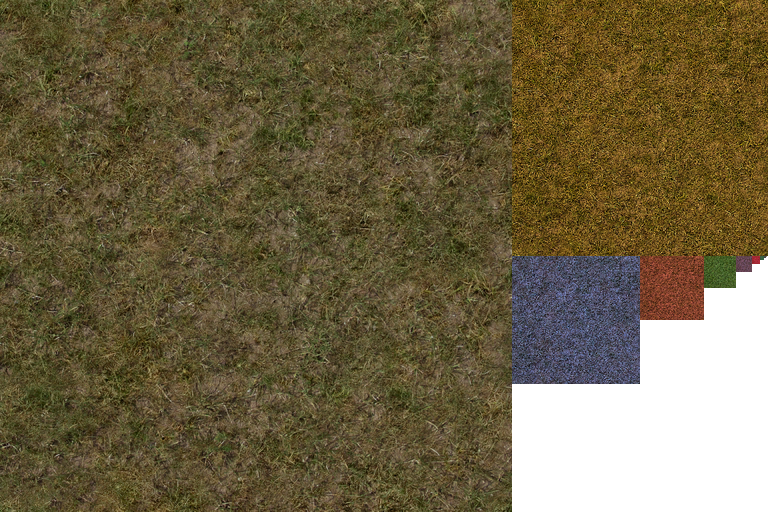

To be able to see which mipmap Gothic uses at which distance, I tinted each mipmap of the grass texture in a different color.

By the way, this experiment took place during one of the modder get-togethers, so some of the attendees couldn't help but admire this "rainbow grass". Admittedly, it has a psychedelic touch.

Anyway, the sobering realization was that the original texture was hardly ever used for rendering; it was almost always one of the mipmaps. This conclusion can be drawn from the fact that the grass in the screenshot has its normal color only at the bottom of the screen. The reason was soon found: Gothic does not use anisotropic filtering (AF). This is a blur reduction technique that takes into account the angle between the camera and the face in question, in addition to the viewing distance.
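Why the angle matters can be illustrated with a small calculation. When a face is viewed at a shallow angle, the area of the texture that belongs to one dot is stretched: long along one axis, short along the other. The sketch below follows the scheme suggested in the OpenGL anisotropic filtering extension; it is not Gothic's or any driver's actual code, and the function names and example values are assumptions.

```python
import math

def isotropic_lod(major, minor):
    """Plain mipmapping: the longer footprint axis alone decides the level."""
    return max(0.0, math.log2(major))

def anisotropic_lod(major, minor, max_anisotropy):
    """Anisotropic filtering: take several samples along the longer axis,
    so that a sharper (lower) mipmap level can be used.

    major, minor:   footprint size in texture pixels along its two axes.
    max_anisotropy: upper limit for the number of samples, e.g. 4 or 16.
    """
    samples = min(math.ceil(major / minor), max_anisotropy)
    lod = max(0.0, math.log2(major / samples))
    return lod, samples

# Hypothetical ground seen at a shallow angle: 16 texture pixels deep,
# but only 1 texture pixel wide per screen dot.
print(isotropic_lod(16.0, 1.0))        # blurry mipmap far down the chain
print(anisotropic_lod(16.0, 1.0, 4))   # sharper level, 4 samples
print(anisotropic_lod(16.0, 1.0, 16))  # original texture, 16 samples
```

Without anisotropic filtering, the stretched footprint forces a small, blurry mipmap even though the texture would be sharp enough across its short axis. With it, the original texture stays in use much farther into the distance, at the price of more samples per dot.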

It should be said that Gothic's graphics engine is based on DirectX 7, and this technology was already available at the time. However, Gothic offered no way to enable it, and this could not be changed with the means of traditional Gothic modding. That's why I forced anisotropic filtering via the driver of my graphics card. As you can see, the original texture was then used within a larger radius, the individual mipmaps were blended better, and the sharpness was maintained even in depth.

Some time later, Degenerated, the original author of the D3D11 renderer, wrote a plugin for Gothic that removed a vertex limit that had been a serious obstacle for us. I then extended this plugin and, among other things, enabled anisotropic filtering. Although this increases the computational effort again, with modern graphics cards you will hardly notice any difference in performance.

To conclude, the following screenshot, admittedly somewhat older, compares standard Gothic with our optimized version once more. I want to emphasize that these are the same textures, just rendered differently.

By the way, some time later the SystemPack was released, which included anisotropic filtering along with lots of bug fixes. Union was released as well; it adopted the plugin architecture and simplified the development of new plugins.

In the next part of this series, we will talk about the RGB color model and the rendering of transparent pixels.